Summary

- AI advancements, particularly in generative AI, hold the potential to revolutionize retail decision-making by efficiently processing vast amounts of data.

- Large Language Models (LLMs) offer solutions for processing text data at scale, addressing challenges like web crawling and data pipeline inefficiencies.

- LLMs enable automated parsing of HTML, improving data quality, scalability, and automation, ultimately empowering retail teams with better insights and competitive advantages.

- As Gen AI evolves, it promises further automation improvements beyond HTML parsing, offering retailers the opportunity to stay dynamic and competitive in the market.

Introduction

The rapid development of AI has led to new, exciting technologies with the potential to revolutionize the way we think and make decisions. This impact is being felt across retail. AI-based solutions can process and transform data at a global scale, allowing your team to better consume valuable insights and make informed decisions.

The latest technical leap forward is the advent of generative AI: models capable of generating text, images, and other media. For text, these are typically Large Language Models (LLMs).

If leveraged appropriately, Gen AI offers new opportunities to develop and automate data processing systems, unlocking efficiencies and cost savings across multiple retail teams.

Gen AI: A New Solution to Working With “Big Data”

There are many possible applications of Gen AI in retail. The use of LLMs to intelligently process text data at scale is certainly one with a high potential reward for retail teams, and offers a new solution to working with “big data.”

“Big Data” in Retail

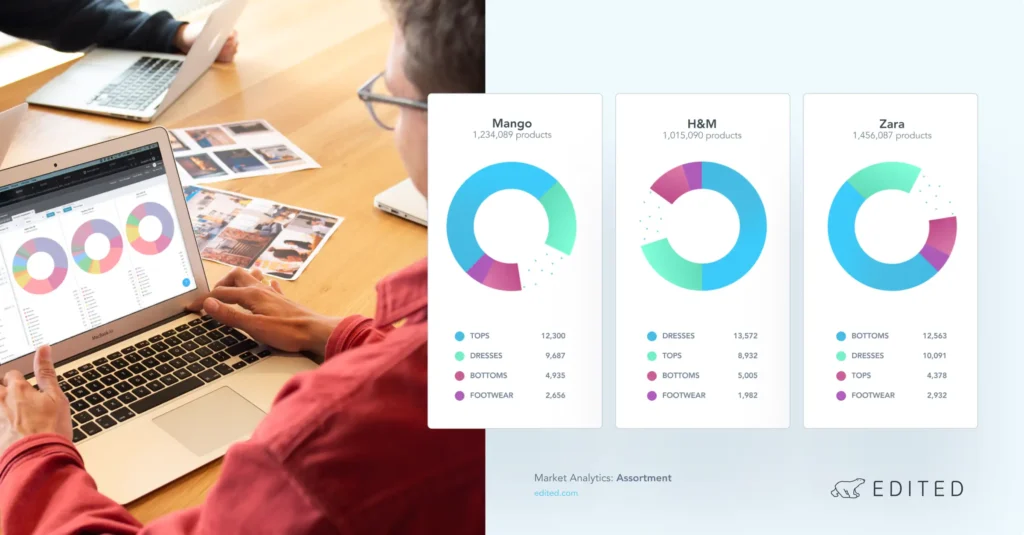

With retail markets operating on a global scale, analysis teams are exposed to hundreds of millions of products, each represented by highly time-dependent, interrelated data points.

The scale of this dynamic data pipeline represents a challenge to internal engineering teams of retail tech vendors, who are tasked with delivering a constant flow of up-to-date, accurate product insights.

A number of engineering processes within a data pipeline may be effectively static – unable to respond to real-time changes in an automated way and instead relying on human-in-the-loop input.

The end result?

- Inaccuracies in the processed data, which negatively impact the end users

- Slower response times to fix inaccuracies, which may not be immediately evident

- Decreased speed as projects scale with larger, more complex data pipelines (e.g. expanding to new markets)

Web Crawling in Retail

A prominent example of this problem in the retail industry concerns web crawling, where tech vendors have to dedicate considerable engineering resources to creating and updating crawling tools capable of collecting and parsing product information across a large number of websites.

Traditional web crawling has all the hallmarks of such a “big data” problem:

- Static engineering solutions in a dynamic environment

- Human-intensive fixes

- Poor scaling to an increasing number of data sources

The base problem in web crawling can be split into two components: product discovery and product page parsing. Parsing typically consists of implementing a routine that processes a web page (in the form of an HTML document, or similar) to extract key features of interest, e.g. product details like price.

If the website is updated, however, the structure of the HTML is liable to change, and static solutions are likely to fail until someone fixes them manually. Ideally, parsing routines would be fully automated and adapt to such changes on their own, delivering faster and more accurate product information to retail analysts.
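To make the fragility concrete, here is a minimal sketch of a static parsing routine using only Python's standard library. The page markup, the `price` class name, and the helper names are invented for illustration and are not taken from any real retailer or from EDITED's crawlers.

```python
from html.parser import HTMLParser


class PriceBySelector(HTMLParser):
    """Hard-coded parser: captures the text of the first <span class="price">."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.price = None

    def handle_starttag(self, tag, attrs):
        # The tag name and class are baked in: this is the "static" part.
        if tag == "span" and ("class", "price") in attrs:
            self._in_price = True

    def handle_data(self, data):
        if self._in_price and self.price is None:
            self.price = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._in_price = False


def parse_price(html):
    parser = PriceBySelector()
    parser.feed(html)
    return parser.price


# Hypothetical markup before and after a site redesign.
old_page = '<div><span class="price">$19.99</span></div>'
new_page = '<div><p data-testid="current-price">$19.99</p></div>'

print(parse_price(old_page))  # the price is found
print(parse_price(new_page))  # nothing is found: the routine fails silently
```

The price is still on the redesigned page, but the routine no longer finds it, and nothing signals the failure unless someone checks.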

With Gen AI, this may now be a step closer to reality.

A Gen AI Solution

Extracting information from HTML with LLMs has been an area of active research in recent years, as LLMs are able to “understand” both natural and programming languages.[1] LLMs, such as those available through OpenAI, have been trained on a huge corpus of text from across the internet, and are thus exposed to a wealth of shared information.

The power of LLMs lies in their flexibility in being applied to different problems. An LLM needs only a well-defined text prompt outlining its task to be ready to use.

What Does This Mean for Retailers?

Say we’re interested in tracking the price of products in the market, across multiple retailers’ websites. An LLM could be fed a block of text containing product information (e.g. the HTML of a product page) and be tasked with extracting specific fields, such as the product price.

A traditional hard-coded solution to this would be costly to scale to a large number of retailers, whereas an appropriate LLM could be applied to carry out this task on any number of web pages.
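As a rough sketch of what such a task might look like in practice, the snippet below builds a price-extraction prompt and parses the model's reply. The prompt wording, the JSON keys, and the `llm` argument (any function that takes a prompt string and returns the model's text) are assumptions made for illustration; no specific vendor API is implied.

```python
import json

# Hypothetical prompt; the wording and output schema are illustrative only.
PROMPT_TEMPLATE = (
    "You are given an HTML fragment from a retailer's product page.\n"
    'Return a JSON object with the keys "price" (a number) and '
    '"currency" (an ISO 4217 code). Use null for anything not present.\n\n'
    "HTML:\n{html}\n"
)


def build_price_prompt(html):
    """Wrap an HTML fragment in the extraction instructions."""
    return PROMPT_TEMPLATE.format(html=html)


def extract_price(html, llm):
    """Send the prompt to `llm` (a callable: prompt -> reply text) and decode the JSON reply."""
    reply = llm(build_price_prompt(html))
    return json.loads(reply)


# A stand-in for a real model so the sketch runs offline.
def fake_llm(prompt):
    return '{"price": 19.99, "currency": "GBP"}'


snippet = '<p data-testid="current-price">£19.99</p>'
print(extract_price(snippet, fake_llm))
```

The same prompt can be reused unchanged across retailers, because nothing in it refers to a particular site's markup.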

Such a dynamic solution could allow users to get ahead of the competition in exploring the breadth of the market and be more confident in delivering up-to-date, accurate measures of product pricing for their analysis teams.

This process can then extend to a whole range of tasks beyond pricing: almost any information on a retailer’s web page can be understood and processed this way. A forward-thinking retail team leveraging this technology would be well placed to succeed in their market.

Overcoming Limitations

Extracting the most value from a Gen AI solution requires careful design around the current limitations of this ever-improving technology. For instance, LLMs have a limited “context window,” the maximum amount of text the model can take in at once. As a result, current LLMs typically cannot process an entire HTML document in one go, because the input is too large.

Although chunks of text can be passed into the LLM individually, the LLM cannot carry context from one chunk to the next. This could lead to spurious results if relevant text appears in multiple places within the HTML, e.g. multiple product prices on one page.
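The toy example below illustrates the ambiguity. It splits a page into fixed-size chunks and lists the price-like strings in each one. The character budget is a crude stand-in for a real token limit, the regular expression stands in for an independent per-chunk LLM call, and the page markup is invented.

```python
import re

PRICE_PATTERN = re.compile(r"\$\d+\.\d{2}")


def chunk_text(text, max_chars):
    """Split text into consecutive pieces of at most max_chars characters."""
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


def prices_per_chunk(chunks):
    """Look at each chunk in isolation, as independent LLM calls would."""
    return [PRICE_PATTERN.findall(chunk) for chunk in chunks]


# Hypothetical page: the main product plus a recommendation widget.
page = (
    '<main><h1>Linen shirt</h1><span class="price">$40.00</span></main>'
    '<aside><h2>You may also like</h2><span class="price">$25.00</span></aside>'
)

chunks = chunk_text(page, max_chars=80)
print(prices_per_chunk(chunks))
```

Each chunk yields a plausible price, but the second chunk no longer contains the `<main>` element or the product title, so a call that only sees that chunk cannot tell that its price belongs to a recommendation. A fixed-size split can also cut a value in half at a chunk boundary.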

Moreover, for all the power of LLMs, it is important to remember that they are not perfect solutions, and like any machine learning model, can make mistakes.

Without a proper system to limit and catch them, mistakes could lead to negative consequences throughout the remainder of the data pipeline, and, ultimately, affect the retail teams relying on high quality output.

Using Retail Technology

By partnering with a retail intelligence platform that is conducting specialized data science research, like EDITED, it may be possible to alleviate these concerns and build an optimized automated parsing method that limits costs and maximizes accuracy.

An ideal solution to this task would likely not take the form of a single LLM call, but rather a larger procedural pipeline, which acts to pre-process, analyze, and post-process HTML text.

To mitigate mistakes, an HTML document would first be pre-processed so that only the chunk of HTML deemed relevant to the problem is passed on as input. One or more independent LLM calls could then parse this chunk to extract the correct contextual information, with automated checks confirming that the extracted data is in the expected form.

Tech vendors can then make this more scalable by developing self-hosted, open-source LLM frameworks to work within their data pipeline. Together, these ideas form a conceptual automated parser, capable of delivering the high-value automation improvements to your retail team, as promised by Gen AI.
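The three stages described above (pre-process, LLM call, post-process) could be sketched roughly as follows. This is a conceptual outline under stated assumptions, not EDITED's implementation: the keyword-window heuristic, the validation rules, and the `llm` callable are all hypothetical.

```python
import json
import re


def preprocess(html, keyword="price", window=200):
    """Drop <script>/<style> blocks, then keep text around the first keyword hit."""
    cleaned = re.sub(r"(?is)<(script|style)\b.*?</\1\s*>", "", html)
    hit = cleaned.lower().find(keyword)
    if hit == -1:
        return None
    return cleaned[max(0, hit - window):hit + window]


def validate(record):
    """Check that the extracted record has the expected shape."""
    price = record.get("price")
    currency = record.get("currency")
    price_ok = (
        isinstance(price, (int, float))
        and not isinstance(price, bool)
        and price > 0
    )
    currency_ok = (
        isinstance(currency, str)
        and re.fullmatch(r"[A-Z]{3}", currency) is not None
    )
    return price_ok and currency_ok


def parse_product_page(html, llm):
    """Pre-process, call the model, and return a record only if it passes the checks."""
    snippet = preprocess(html)
    if snippet is None:
        return None
    try:
        record = json.loads(llm("Extract price and currency as JSON from:\n" + snippet))
    except json.JSONDecodeError:
        return None  # the reply was not valid JSON
    if not isinstance(record, dict) or not validate(record):
        return None  # the reply was JSON but failed the automated checks
    return record


# Stand-ins for a real model so the sketch runs offline.
def good_llm(prompt):
    return '{"price": 19.99, "currency": "USD"}'


def bad_llm(prompt):
    return '{"price": -5, "currency": "usd"}'


html = (
    "<html><script>var price = 1;</script><body>"
    '<span class="price">$19.99</span></body></html>'
)
print(parse_product_page(html, good_llm))
print(parse_product_page(html, bad_llm))
```

Returning `None` rather than an unchecked value means a model mistake surfaces as a visible gap that can be retried or flagged, instead of flowing silently into the rest of the data pipeline.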

Benefits to Your Team

A Gen AI-driven approach to automated HTML parsing opens up multiple benefits to retail teams. These include:

- Improved data quality: An LLM parsing routine has the ability to respond to website changes, allowing it to effectively “self-heal,” unlike traditional hard-coded methods. This significantly reduces the risk of capturing incorrect data, or missing data altogether, meaning retail teams can make better-informed decisions in critical business areas, e.g. pricing and competition comparison.

- Quicker scaling: An effective LLM parser would easily output values from new HTML documents, making this approach useful for scaling quickly to ever-larger datasets, and unlocking information on new retailers and markets.

- More automation: LLMs automate manual tasks and reduce the need for a human-in-the-loop. Retail teams can then benefit from the resources this frees up, allowing for a renewed focus on the creation and improvement of products and services which your teams rely upon.

- Getting ahead of the competition: By leveraging cutting-edge technologies, such as those offered with Gen AI, retail teams can give themselves a competitive edge over those that stay static in a highly dynamic marketplace.

Conclusion

As we have seen, Gen AI offers the seeds from which more advanced, automated solutions can grow. If researched and applied appropriately, LLMs have the opportunity to drive automation improvements across retail, not just limited to this example of HTML parsing.

At EDITED, we understand the challenge of working with “big data,” given we ingest ~700 million products a week using web crawlers spanning global markets. As a company, we invest in a dedicated product ingestion team focused on the creation and upkeep of these processes, as well as cutting-edge data science research focused on improving internal processes. EDITED is continuing to develop new processes and products with Gen AI that will help lead the market in shaping innovation within retail.

Discover more about how EDITED’s commitment to AI research can help your team better consume valuable insights and make informed decisions by booking a meeting here.

Sources:

- [1] Gur, I. et al. (2022), https://arxiv.org/abs/2210.03945